Ulrike Uhlig: How do kids conceive the internet?

I wanted to understand how kids between 10 and 18 conceive the internet.
Surely, we have seen a generation that we call digital natives grow up with
the internet. Now, there is a younger generation who grows up with pervasive
technology, such as smartphones, smart watches, virtual assistants and so on.
And only a few of them have parents who work in IT or engineering.


Pervasive technology contributes to the idea that the internet is immaterial

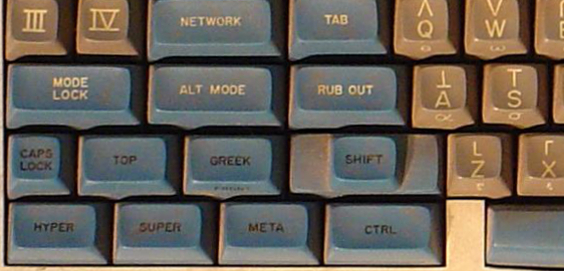

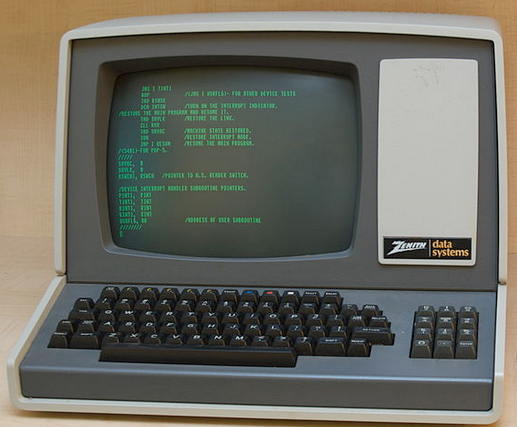

With their search engine website design, Google has put in place an extremely
simple and straightforward user interface. Since then, designers and
psychologists have worked on making user interfaces more and more intuitive to
use. The buzzwords are usability and user experience design. Besides this
optimization of visual interfaces, haptic interfaces have evolved as well,
specifically on smartphones and tablets where hand gestures have replaced more
clumsy external haptic interfaces such as a mouse. And beyond interfaces, the
devices themselves have become smaller and slicker. While in our generation
many people have experienced opening a computer tower or a laptop to replace
parts, with the side effect of seeing the parts the device is physically
composed of, the new generation of end user devices makes this close to
impossible, essentially transforming these devices into black boxes, and
further contributing to the idea that the internet they are used to access
is something entirely intangible.

What do kids in 2022 really know about the internet?

So, what do kids of that generation really know about the internet, beyond
purely using services they do not control? In order to find out, I decided to
interview children between 10 and 18.

I conducted 5 interviews with kids aged 9, 10, 12, 15 and 17, two boys and
three girls. Two live in rural Germany, one in a German urban area, and two
live in the French capital.

I wrote the questions in a way to stimulate the interviewees to tell me a story
each time. I also told them that the interview is not a test and that there are
no wrong answers.

Except for the 9-year-old, all interviewees possessed both their own
smartphone and their own laptop. All of them used the internet mostly for
chatting, entertainment (video and music streaming, online games), social media
(TikTok, Instagram, YouTube), and instant messaging.

Let me introduce you to their concepts of the internet. That was my first
storytelling question to them:

If aliens had landed on Earth and would ask you what the internet is, what would you explain to them?

The majority of respondents agreed in their replies that the internet is
intangible while still being a place where one can "do anything and
everything".

Before I tell you more about their detailed answers to the above question, let
me show you how they visualize their internet.

If you had to make a drawing to explain to a person what the internet is, what would this drawing look like?

Each interviewee had some minutes to come up with a drawing.
As you will see, that drawing corresponds to what the kids would want an
alien to know about the internet and how they are using the internet
themselves.

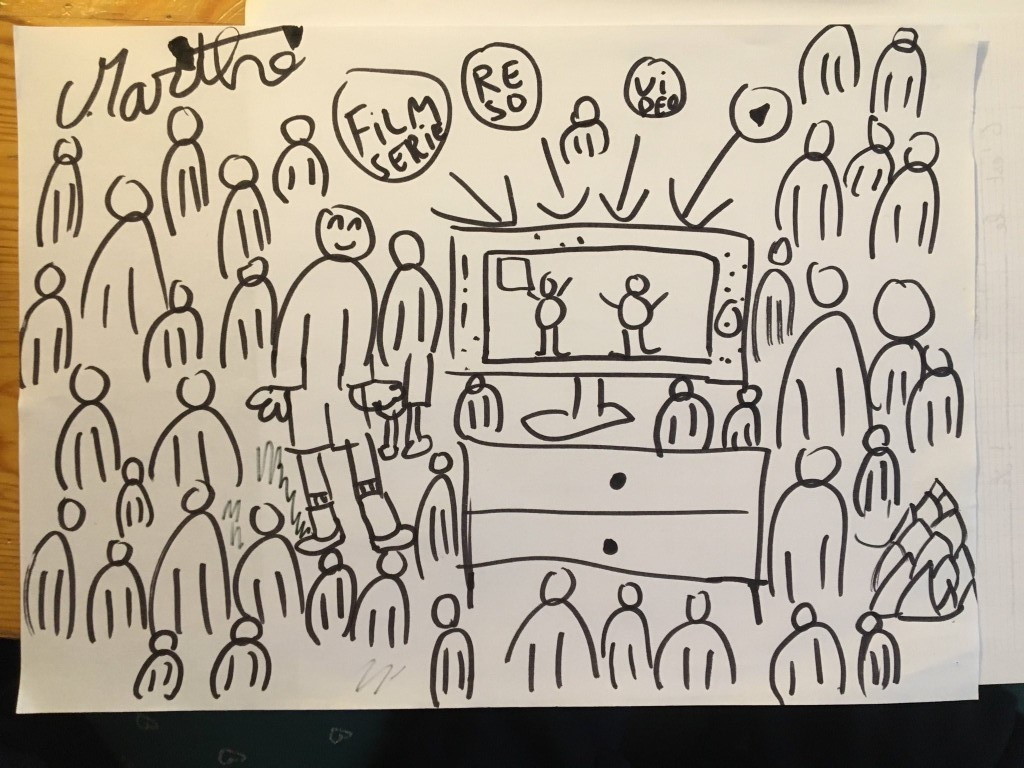

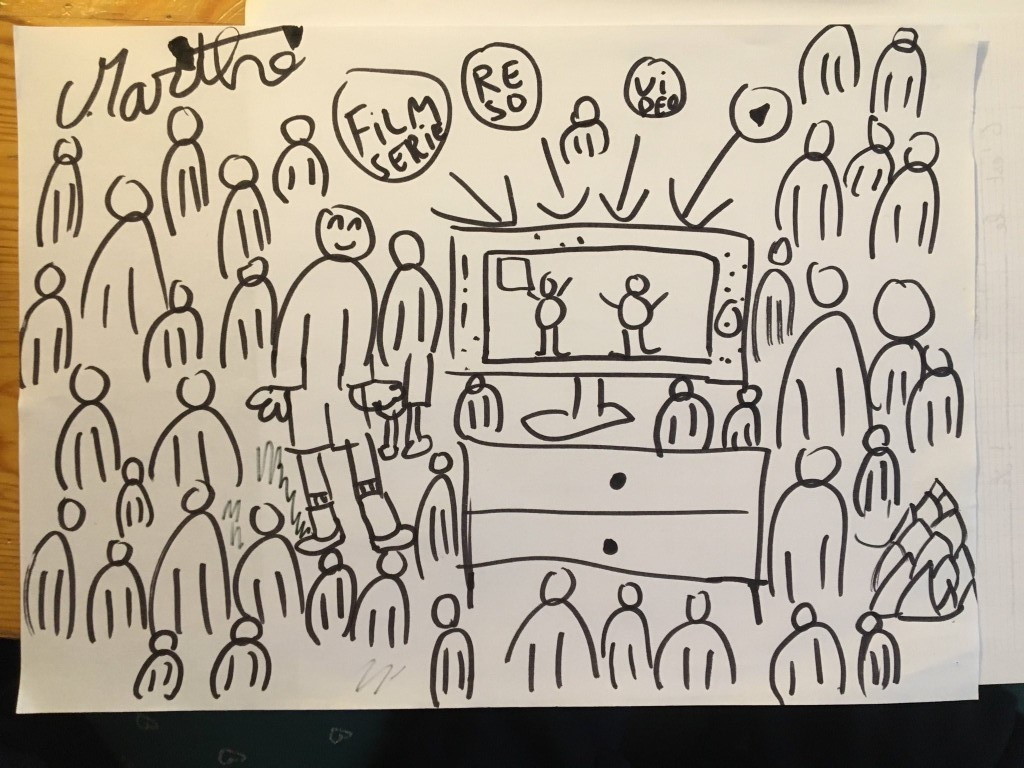

Movies, series, videos

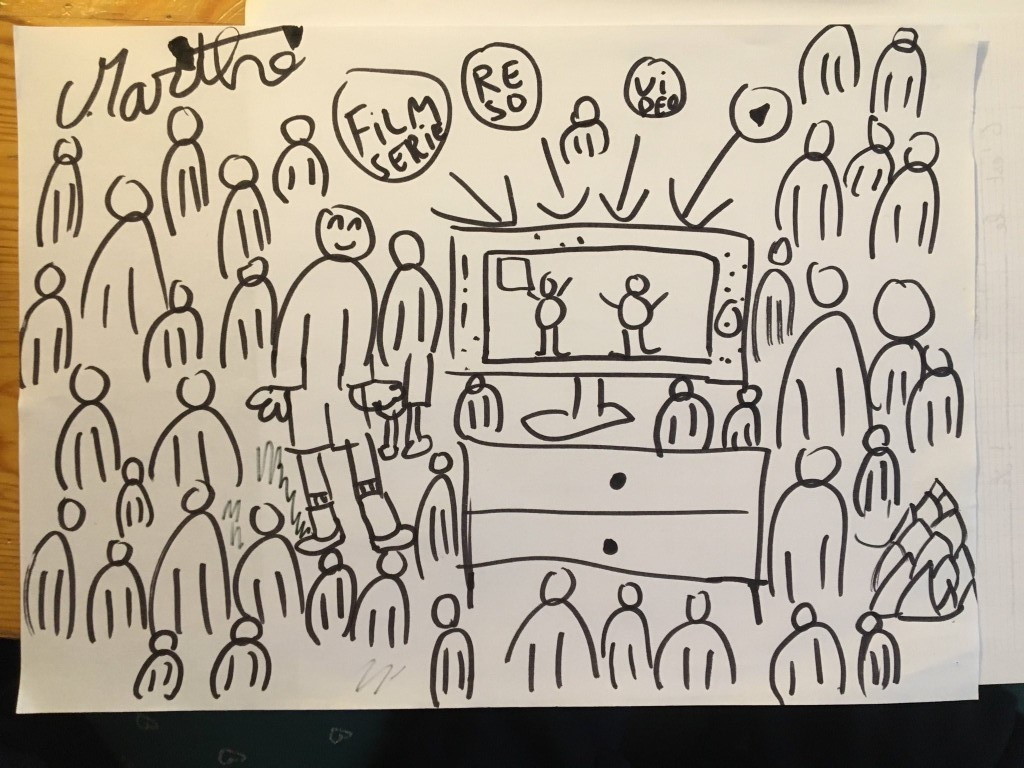

The youngest respondent, a 9-year-old girl, drew a screen with lots of people
around it and the words "film, series, network, video", as well as a play
icon. She said that she mostly uses the internet to watch movies. She was the
only one who used a shared tablet and smartphone that belonged to her family,
not to herself. And she would explain the net like this to an alien:


"Internet is a, er, one cannot touch it, it's an, er [I propose
the word "idea"], yes, it's an idea. Many people use it not necessarily to
watch things, but also to read things or do other stuff."

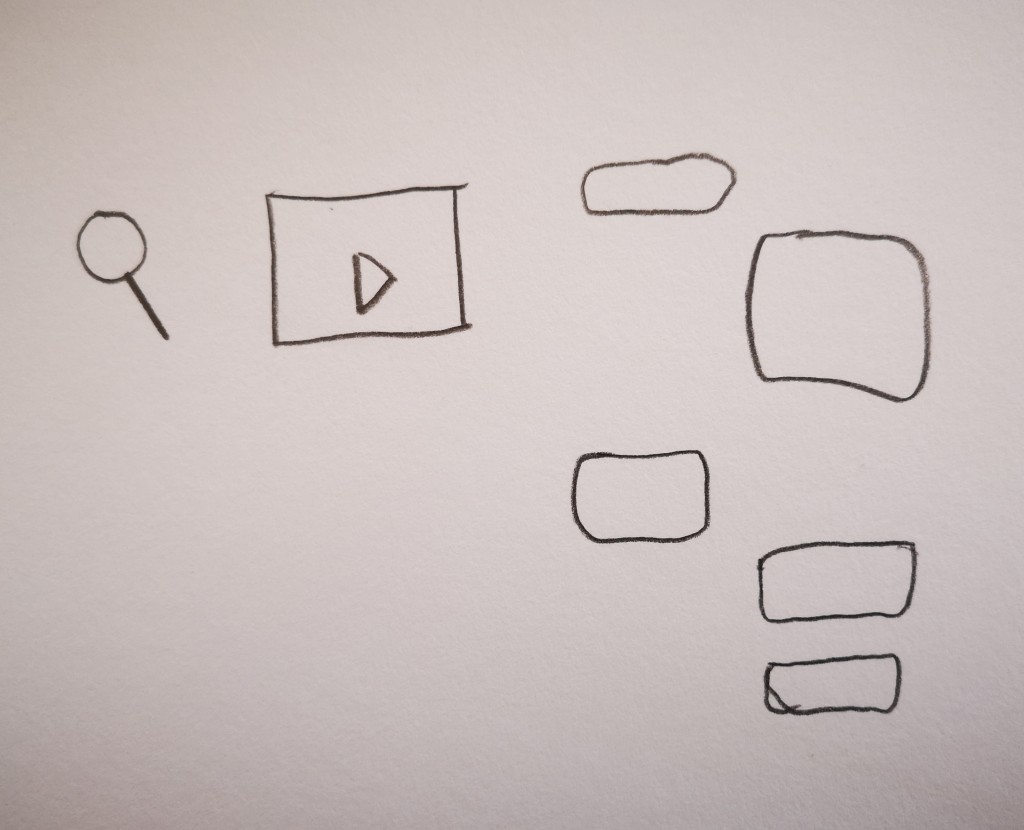

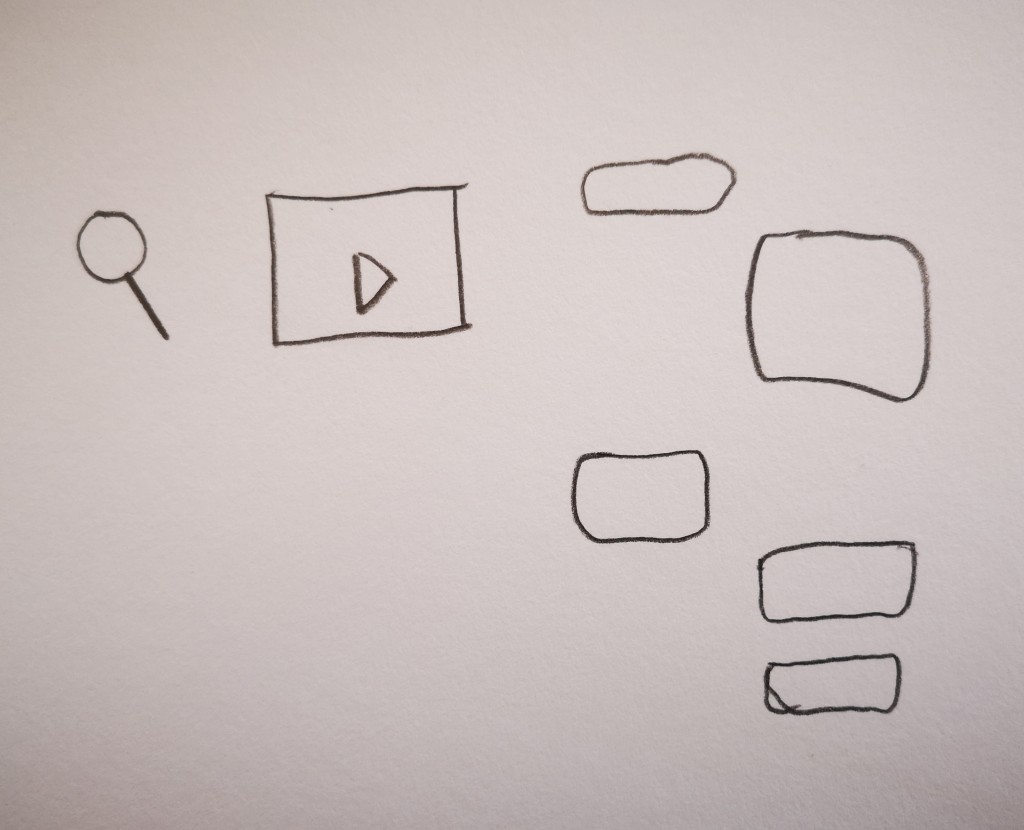

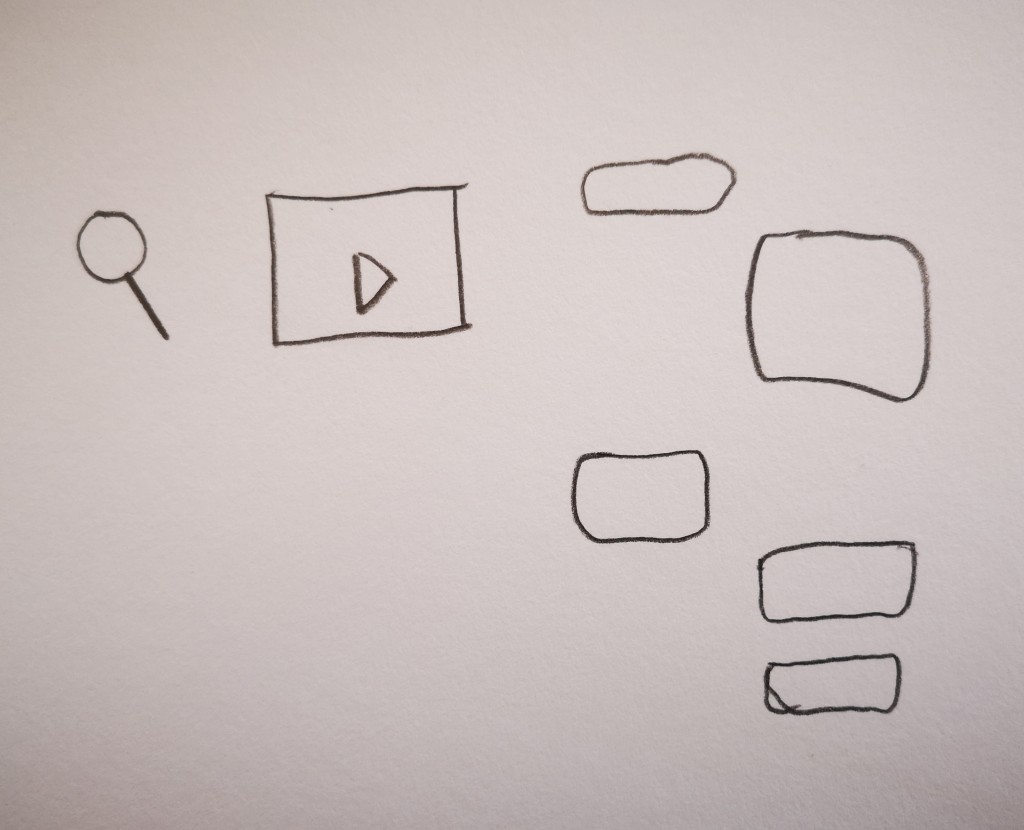

User interface elements

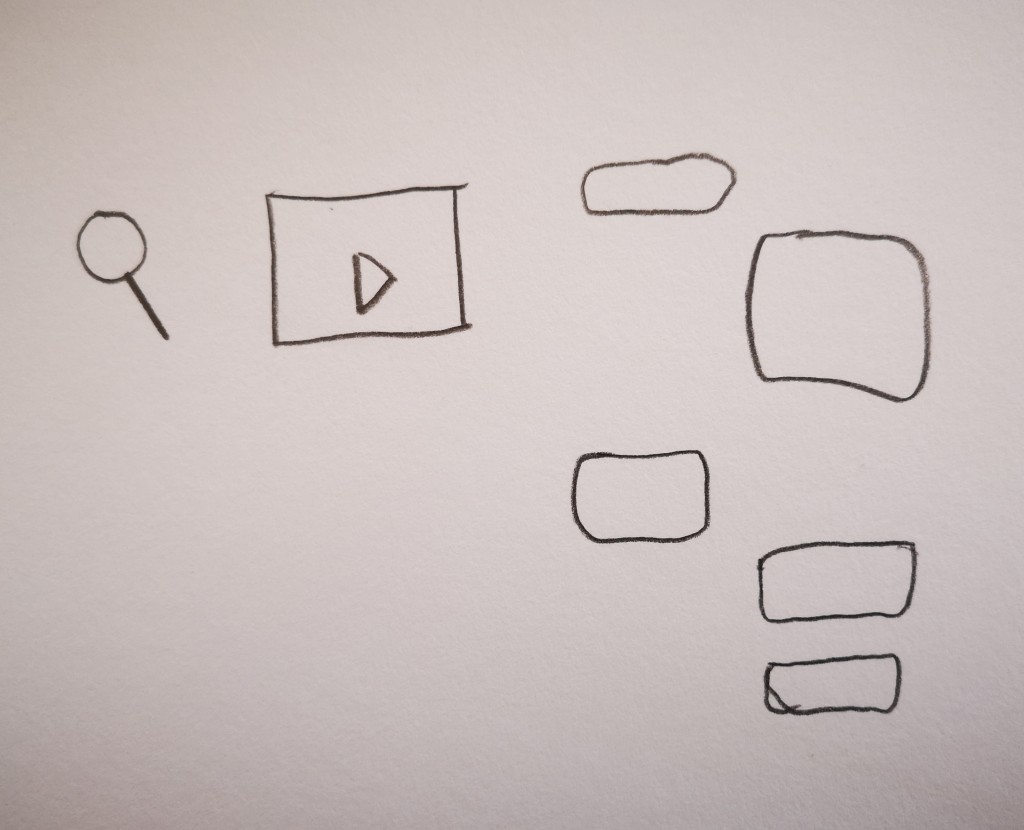

A 10-year-old boy represented the internet by recalling user interface
elements he sees every day in his drawing: a magnifying glass (search
engine), a play icon (video streaming), speech bubbles (instant
messaging). He would explain the internet like this to an alien:


"You can use the internet to learn things or get information,
listen to music, watch movies, and chat with friends. You can do nearly
anything with it."

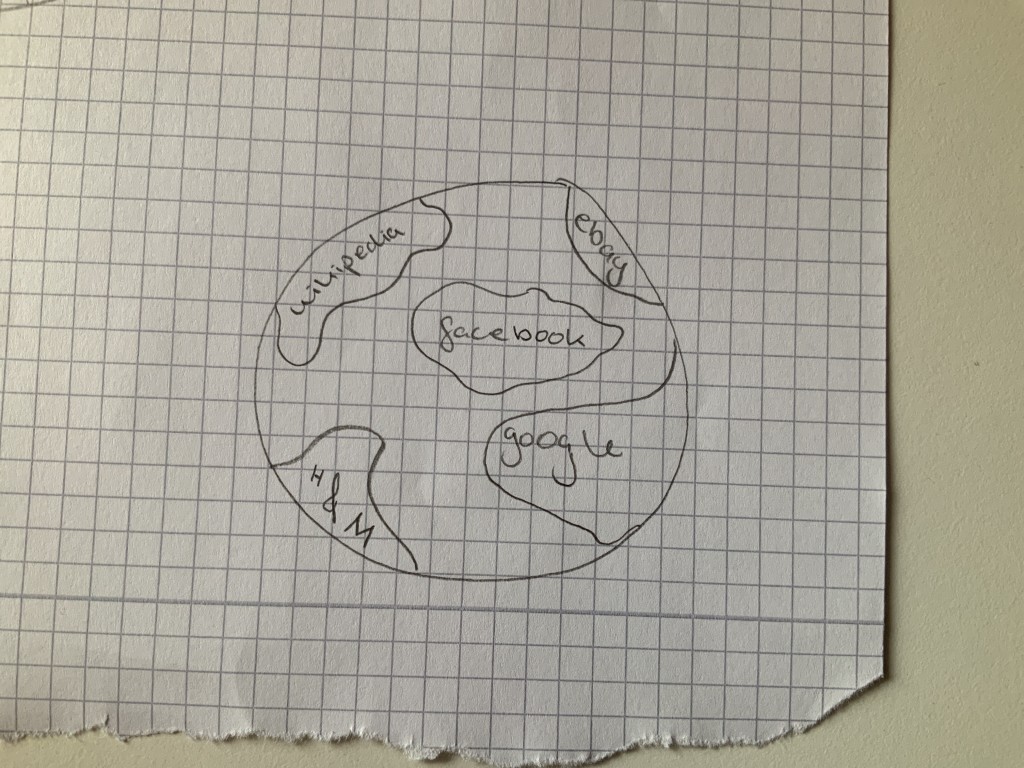

Another planet

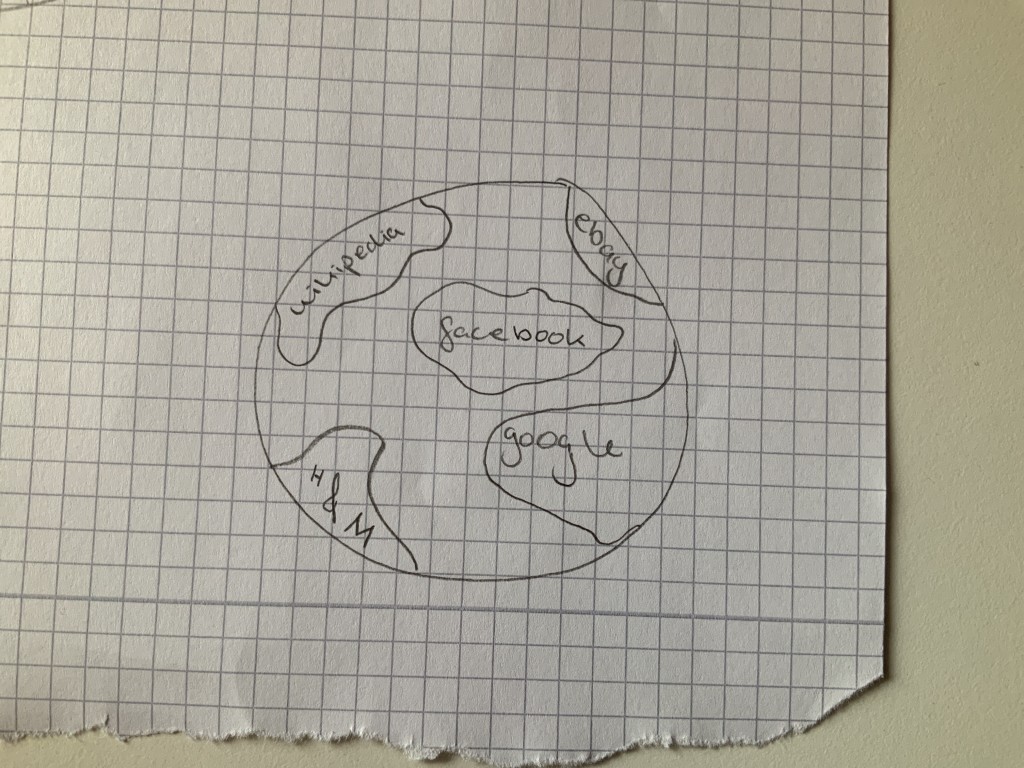

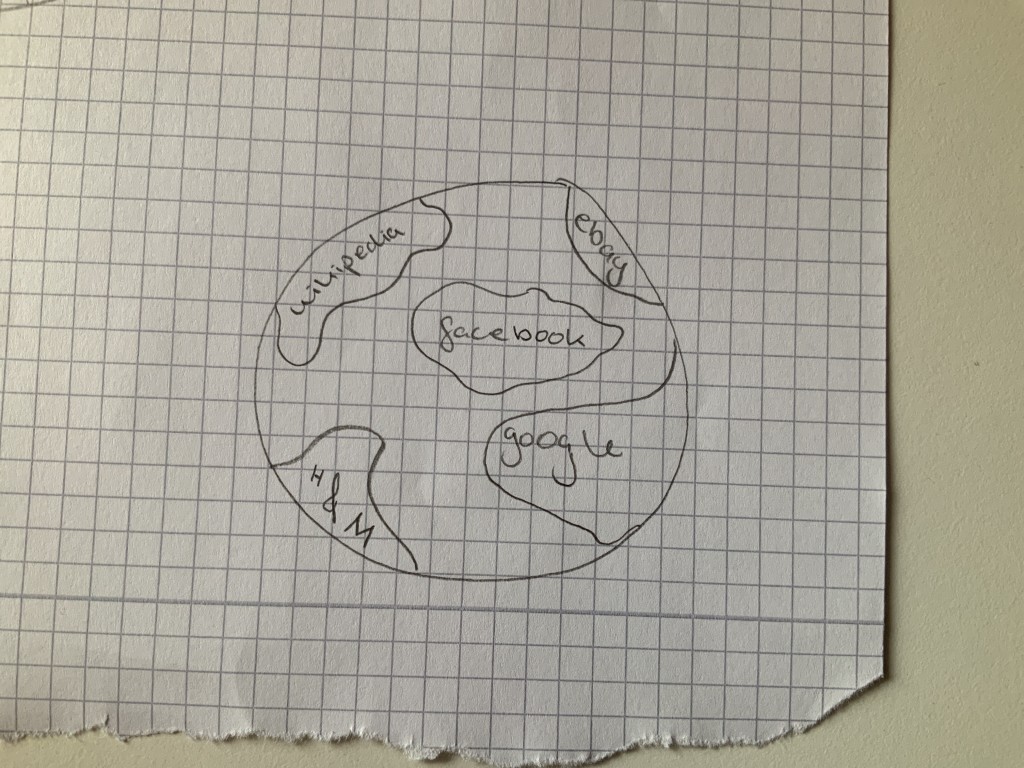

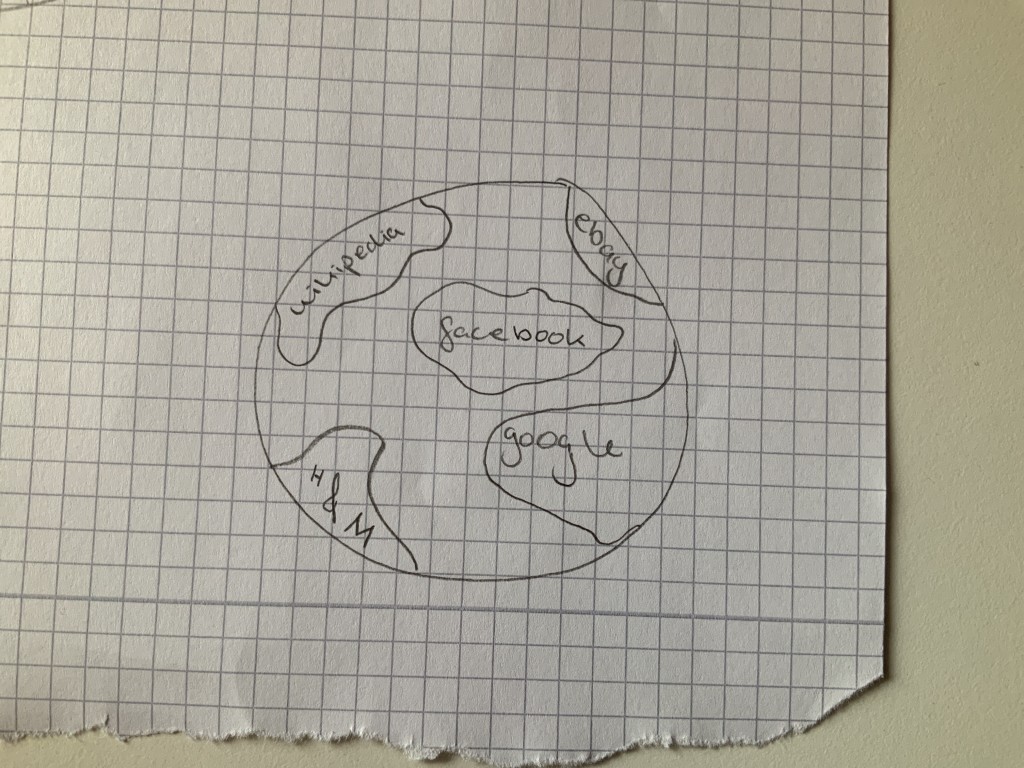

A 12-year-old girl imagines the internet as a second, intangible planet
where Google, Wikipedia, Facebook, Ebay, or H&M are continents that one
enters.


"And on [the] Ebay [continent] there's a country for clothes, and
"trousers", for example, would be a federal state in that
country."

Something that was unique about this interview was that she told me she had an
email address but she never writes emails. She only has an email account to
receive confirmation emails, for example when doing online shopping, or when
registering for a service and needing to confirm one's address. This is
interesting because it's an anti-spam measure that might become outdated with a
generation that uses email less or not at all.

Home network

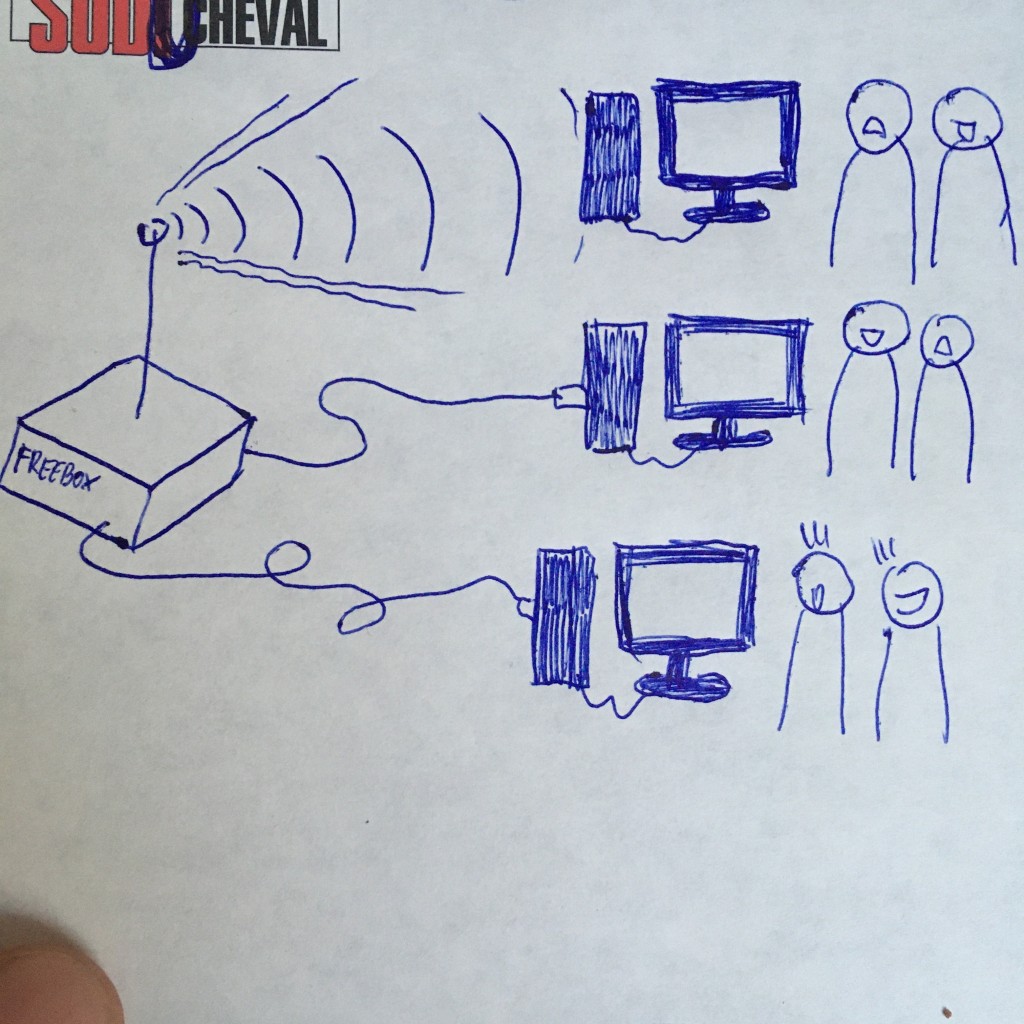

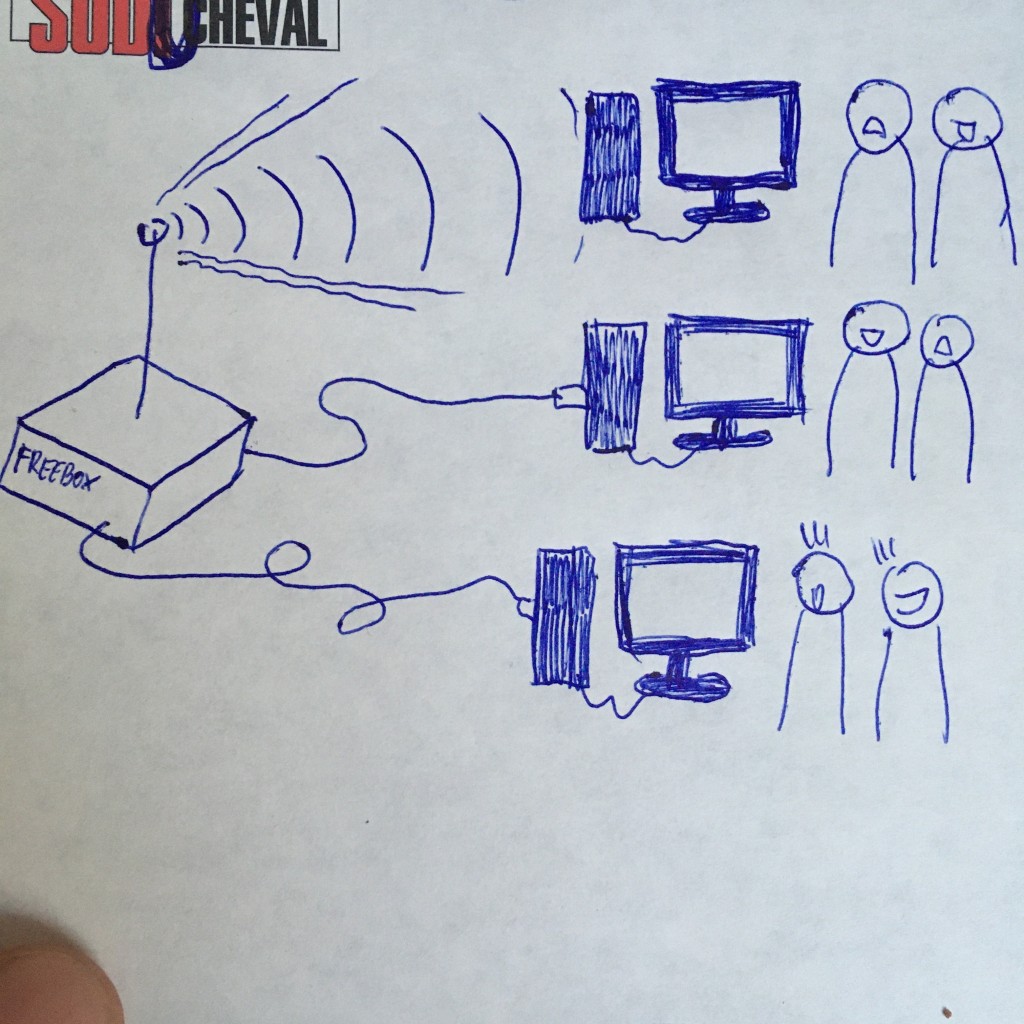

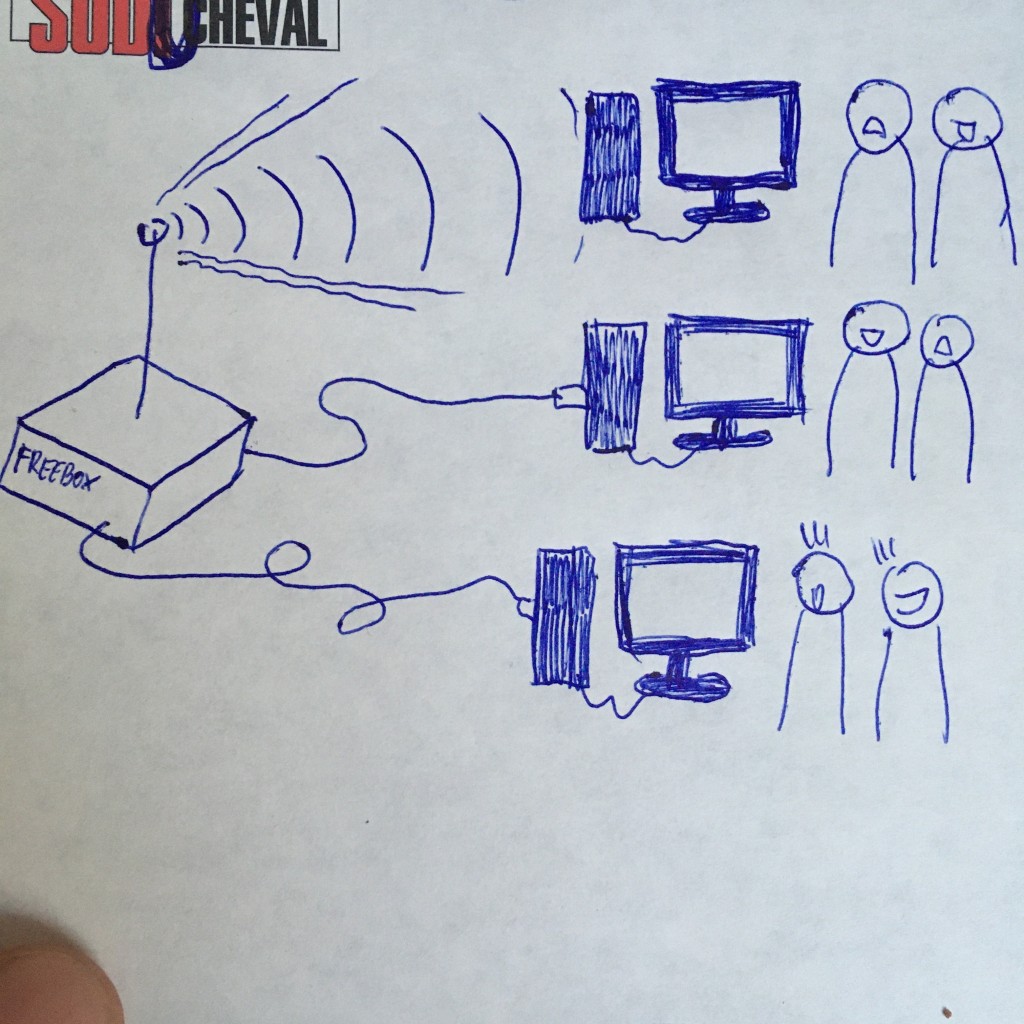

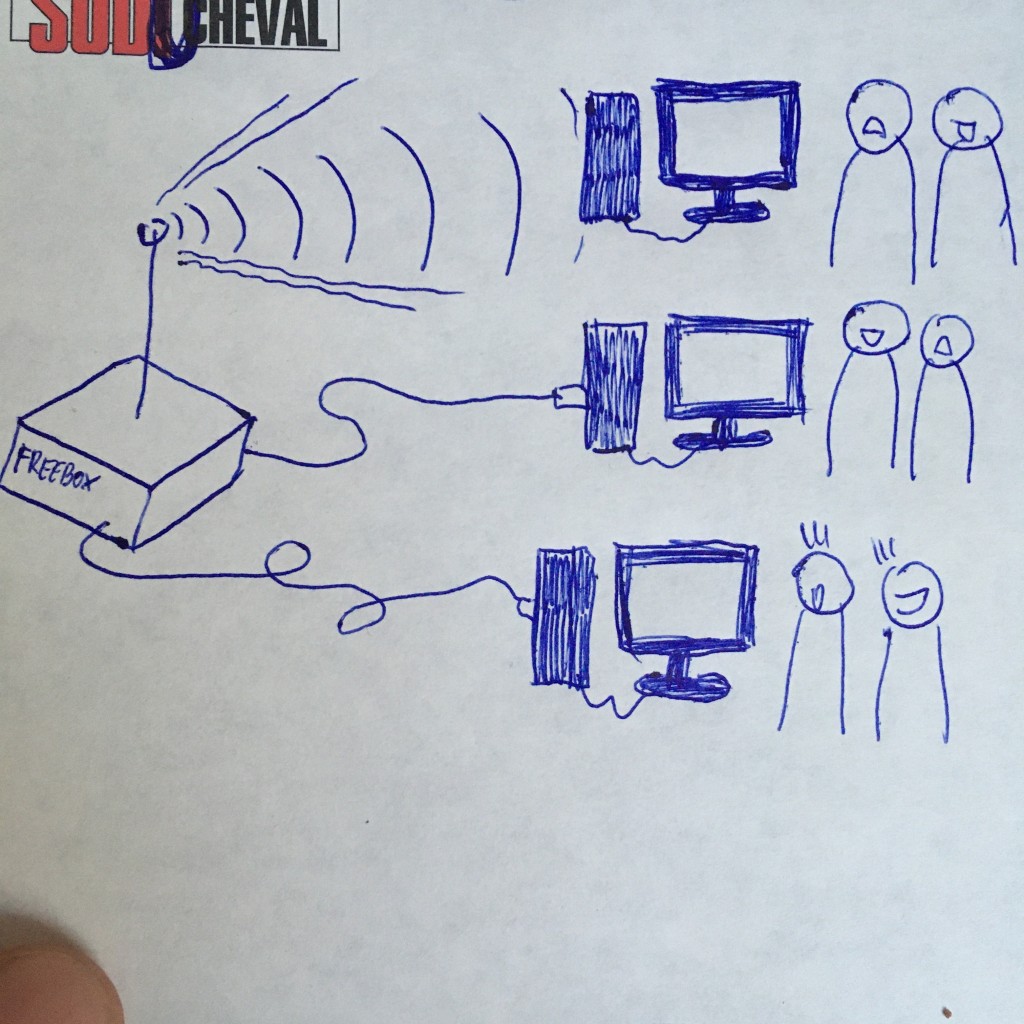

A 15-year-old boy knew that his family's devices are connected to a home router
(Freebox is a router from the French ISP Free) but had no picture of how the
rest of the internet functions. When I asked him what would be behind
the router, on the other side, he said what's behind is like a black hole to
him. However, he was the only interviewee who actually drew cables, wifi
waves, a router, and the local network. His drawing is even extremely precise;
it just lacks the cable connecting the router to the rest of the internet.


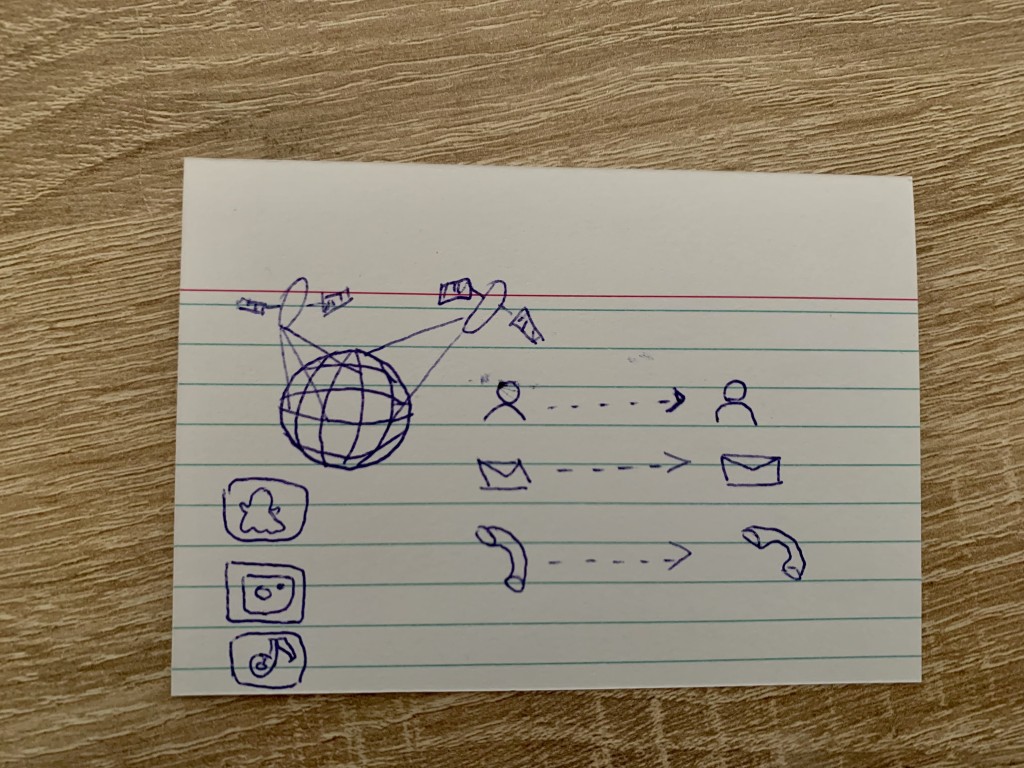

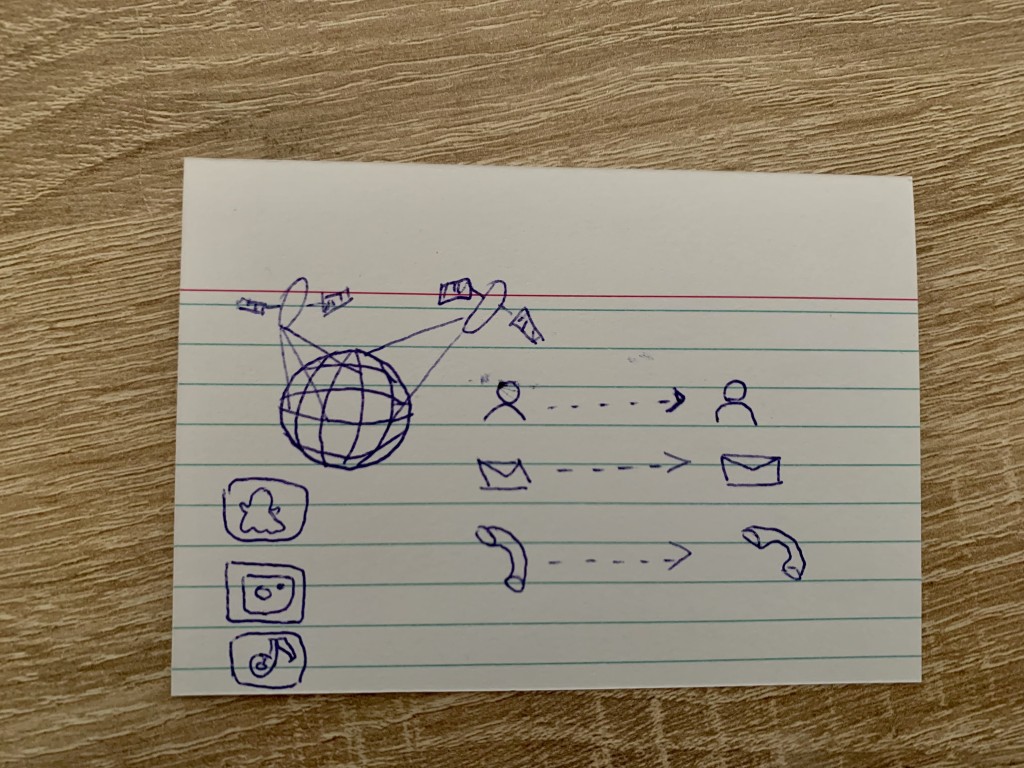

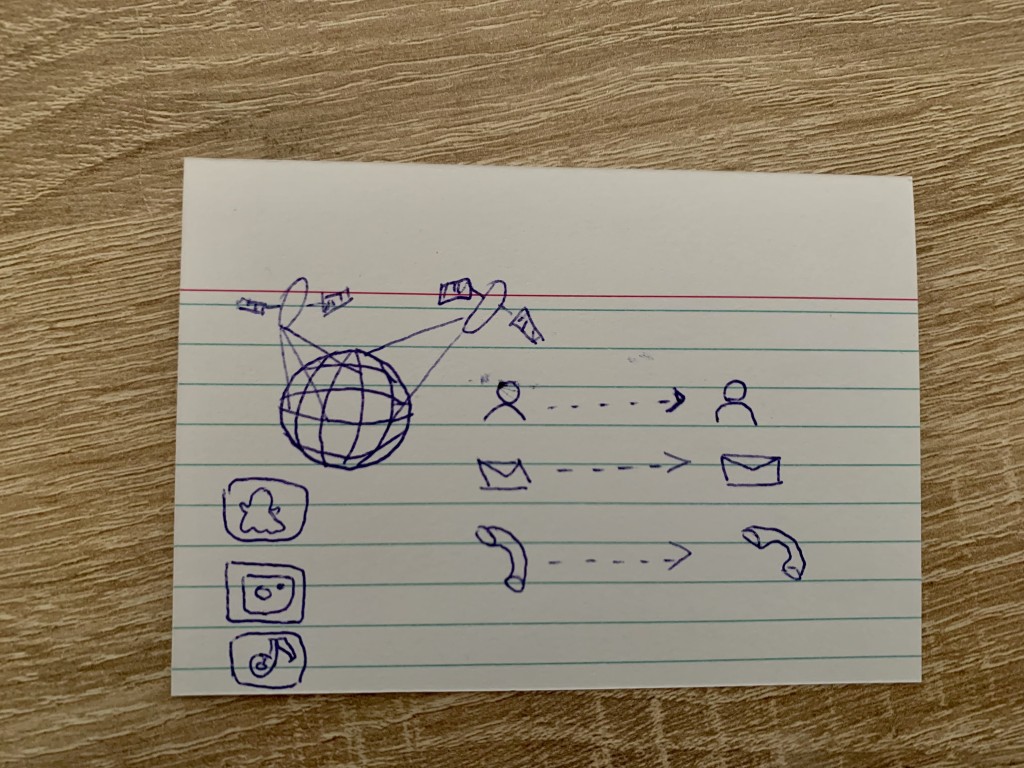

Satellite internet

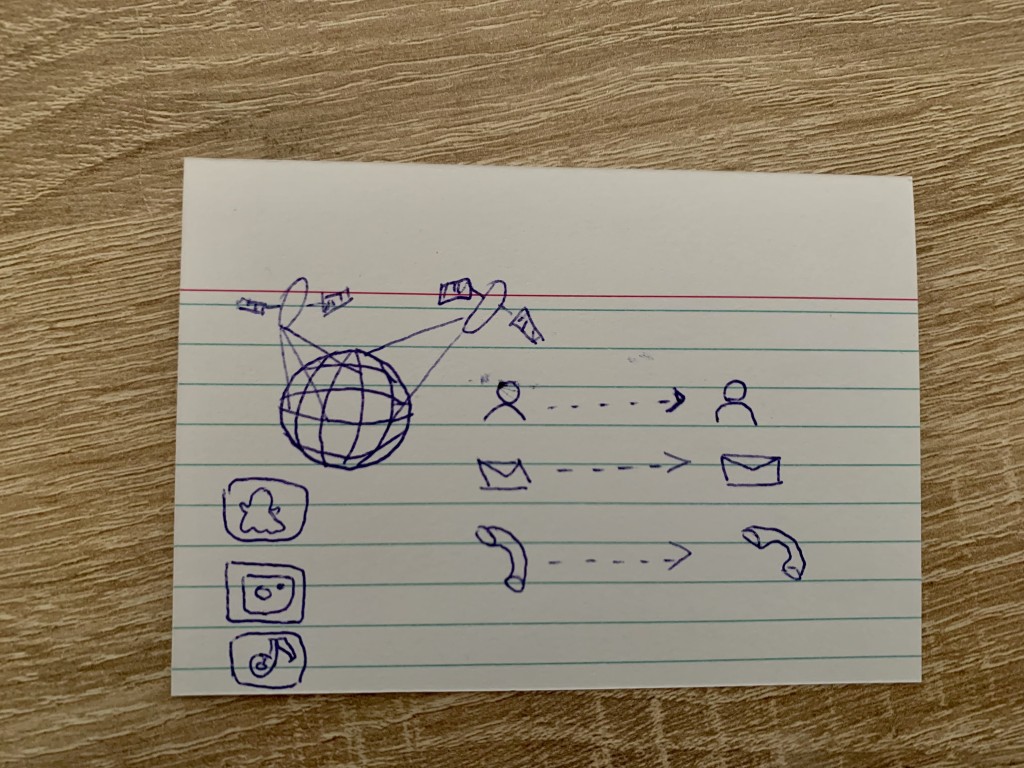

A 17-year-old girl would explain the internet to an alien as follows:


"The internet goes around the entire globe. One is networked with
everyone else on Earth. One can find everything. But one cannot touch the
internet. It's like a parallel world. With a device one can look into the
internet. With search engines, one can find anything in the world, one can
phone around the world, and write messages. [The internet] is a gigantic
thing."

This interviewee was the only one to state that the internet is huge. And
while she is also the only one who drew the internet as actually having
some kind of physical extension beyond her own home, she seems to
believe that internet connectivity is based on satellite technology and
wireless communication.

Imagine that a wise and friendly dragon could teach you one thing about the internet that you've always wanted to know. What would you ask the dragon to teach you about?

A 10-year-old boy said he'd like to know "how big are the servers behind all of
this". That's the only interview in which the word "server" came up.

A 12-year-old girl said: "I would ask how to earn money with the internet. I
always wanted to know how this works, and where the money comes from." I love
the last part of her question!

The 15-year-old boy, for whom everything behind the home router is beyond his
event horizon, would ask: "How is it possible to be connected like we are? How
does the internet work scientifically?"

A 17-year-old girl said she'd like to learn "how the darknet works, what hidden
things are there? Is it possible to get spied on via the internet? Would it be
technically possible to influence devices in a way that one can listen to
secret or remotely controlled devices?"

Lastly, I wanted to learn about what they find annoying or problematic about
the internet.

Imagine you could make the internet better for everyone. What would you do first?

Asked what she would change if she could, the 9-year-old girl advocated for a
global usage limit of the internet in order to protect the human brain.
Also, she said, her parents spend way too much time on their phones and people
should rather spend more time with their children.

Three of the interviewees agreed that they see way too many advertisements and
two of them would like ads to disappear entirely from the web. The other one
said that she doesn't want to see ads, but that ads are fine if she can at
least click them away.

The 15-year-old boy had different ambitions. He told me he would change:

"the age of access to the internet. More and more younger people
access the internet; especially with TikTok there is a recommendation
algorithm that can influence young people a lot. And influencing young
people should be avoided but the internet does it too much. And that can be
negative. If you don't yet have a critical spirit, and you watch certain
videos you cannot yet moderate your stance. It can influence you a lot.
There are so many things that have become indispensable and that happen on
the internet and we have become dependent. What happens if one day it
doesn't work anymore? If we connect more and more things to the net, that's not
a good thing."

The internet - Oh, that's what you mean!

As a side note, my first interview attempt was with an 8-year-old girl
from my family. I asked her if she uses the internet and she said no, so
I abandoned interviewing her. Some days later, while talking to her, she
proposed to look something up on Google, using her smartphone. I said:
"Oh, so you are using the internet!" She replied: "Oh, that's
what you're talking about?"

I think she knows the word "Google" and she knows that she can search
for information with this "Google thing". But it appeared that she
doesn't know that the Google search engine is located somewhere else on
the internet and not on her smartphone. I concluded that for her, using the
services on the smartphone is as natural as switching on a light in
the house: we also don't think about where the electricity comes from
when we do that.

What can we learn from these few interviews?

Unsurprisingly, social media, streaming, entertainment, and instant messaging
are the main activities kids undertake on the internet. They are completely at
the mercy of advertisements in apps and on websites, not knowing how to get rid
of them. They interact on a daily basis with algorithms that are unregulated
and known to perpetuate discrimination and to create filter bubbles, without
necessarily being aware of it. The kids I interviewed act as mere service users
and seem to be mostly confined to specific apps or websites.

All of them perceived the internet as being something intangible. Only the
older interviewees perceived that there must be some kind of physical extension
to it: the 17-year-old girl by drawing a network of satellites around the
globe, the 15-year-old boy by drawing the local network in his home.

To be continued



A 10 year old boy represented the internet by recalling user interface

elements he sees every day in his drawing: a magnifying glass (search

engine), a play icon (video streaming), speech bubbles (instant

messaging). He would explain the internet like this to an alien:

A 10 year old boy represented the internet by recalling user interface

elements he sees every day in his drawing: a magnifying glass (search

engine), a play icon (video streaming), speech bubbles (instant

messaging). He would explain the internet like this to an alien:

"You can use the internet to learn things or get information, listen to music, watch movies, and chat with friends. You can do nearly anything with it."

Another planet

A 12 year old girl imagines the internet like a second, intangible, planet

where Google, Wikipedia, Facebook, Ebay, or H&M are continents that one enters

into.

"And on [the] Ebay [continent] there's a country for clothes, and

'trousers', for example, would be a federal state in that

country."

Something that was unique about this interview was that she told me she had an

email address but she never writes emails. She only has an email account to

receive confirmation emails, for example when doing online shopping, or when

registering for a service and needing to confirm one's address. This is

interesting because it's an anti-spam measure that might become outdated with a

generation that uses email less or not at all.

Home network

A 15 year old boy knew that his family's devices are connected to a home router

(Freebox is a router from the French ISP Free) but could not picture how the

rest of the internet works. When I asked him about what would be behind

the router, on the other side, he said what's behind is like a black hole to

him. However, he was the only interviewee who actually drew cables, wifi

waves, a router, and the local network. His drawing is even extremely precise;

it just lacks the cable connecting the router to the rest of the internet.

Satellite internet

A 17 year old girl would explain the internet to an alien as follows:

"The internet goes around the entire globe. One is networked with

everyone else on Earth. One can find everything. But one cannot touch the

internet. It's like a parallel world. With a device one can look into the

internet. With search engines, one can find anything in the world, one can

phone around the world, and write messages. [The internet] is a gigantic

thing."

This interviewee was the only one to say that the internet is huge. And

while she also is the only one who drew the internet as actually having

some kind of physical extension beyond her own home, she seems to

believe that internet connectivity is based on satellite technology and

wireless communication.

Imagine that a wise and friendly dragon could teach you one thing about the internet that you've always wanted to know. What would you ask the dragon to teach you about?

A 10 year old boy said he'd like to know "how big are the servers behind all of

this". That's the only interview in which the word "server" came up.

A 12 year old girl said "I would ask how to earn money with the internet. I

always wanted to know how this works, and where the money comes from." I love

the last part of her question!

The 15 year old boy for whom everything behind the home router is beyond his

event horizon would ask "How is it possible to be connected like we are? How

does the internet work scientifically?"

A 17 year old girl said she'd like to learn "how the darknet works, what hidden

things are there? Is it possible to get spied on via the internet? Would it be

technically possible to influence devices in a way that one can listen to

secret or remote-controlled devices?"

Lastly, I wanted to learn about

what they find annoying or problematic about the internet.

Imagine you could make the internet better for everyone. What would you do first?

Asked what she would change if she could, the 9 year old girl advocated for a

global usage limit of the internet in order to protect the human brain.

Also, she said, her parents spend way too much time on their phones and people

should rather spend more time with their children.

Three of the interviewees agreed that they see way too many advertisements and

two of them would like ads to disappear entirely from the web. The other one

said that she doesn't want to see ads, but that ads are fine if she can at

least click them away.

The 15 year old boy had different ambitions. He told me he would change:

"the age of access to the internet. More and more younger people

access the internet; especially with TikTok there is a recommendation

algorithm that can influence young people a lot. And influencing young

people should be avoided but the internet does it too much. And that can be

negative. If you don't yet have a critical spirit, and you watch certain

videos you cannot yet moderate your stance. It can influence you a lot.

There are so many things that have become indispensable and that happen on

the internet and we have become dependent. What happens if one day it

doesn't work anymore? If we connect more and more things to the net, that's not

a good thing."

The internet - Oh, that's what you mean!

On a side note, my first interview attempt was with an 8 year old girl

from my family. I asked her if she uses the internet and she said no, so

I abandoned interviewing her. Some days later, while talking to her, she

proposed to look something up on Google, using her smartphone. I said:

"Oh, so you are using the internet!" She replied: "Oh, that's

what you're talking about?"

I've been digging through the firmware for an AMD laptop with a Ryzen 6000 that incorporates Pluton for the past couple of weeks, and I've got some rough conclusions. Note that these are extremely preliminary and may not be accurate, but I'm going to try to encourage others to look into this in more detail. For those of you at home, I'm using an image from

That's why a discussion space about saying NO did not seem out of place

at the feminist hackers assembly :) I based my workshop on the original,

created by the Institute of War and Peace Reporting and distributed

through their holistic security training manual.

I like this workshop because sharing happens in small groups and has

an immediately felt effect. Several people reported that the exercises

allowed them to identify the exact moment when they had said yes to

something despite really having wanted to say no. The exercises from the

workshop can easily be done with a friend or trusted person, and they

can even be done alone by writing them down, although the effect in

writing might be less pronounced.

Reading

So, LibrePlanet, the FSF's conference, is coming!

I very much enjoyed attending this conference in person in March 2018. This

year I submitted a talk again, and it got accepted. Of course, given

the conference is still 100% online, I doubt I will be able to go 100%

conference-mode (I hope to catch a couple of other talks, but well,

we are all eager to go back to how things were before 2020!)

An even newer hot-fix release 1.0.8.3 of
